The 4 Reasons Humanity Might Not Be Around to See the Singularity

Technology has advanced at an exponential rate since the Industrial Revolution. With the fabled singularity on the horizon, here's why humanity might not be around to see its ultimate creation.

Global temperatures are rising and the USA has withdrawn from the Paris Accord. Political tensions are at an all-time high and the UK has set in motion a chain of events destined to dismantle the EU. The Doomsday Clock is firmly stuck at two minutes to midnight at a time when there are still thousands of nuclear weapons on earth, and CO2 levels are the highest they've been in over 400,000 years...

It's no wonder the big tech firms are hedging their bets by throwing everything they've got at developing a general intelligence A.I. If we as a species don't make it to the stars, the fruit of our technological womb may be the last remaining legacy of mankind.

What is the singularity?

Since one of the roles of a general artificial intelligence is to improve itself and perform better, it seems pretty obvious that once we have a super-intelligent A.I., it will be able to create a better version of itself. The singularity theory posits that this pattern of self-improvement will bring about an "intelligence explosion" of sorts, first with the intelligence of a mouse, moments later that of a human, and shortly after the intellectual might of the entire human species.

While there's no doubt that ultimately the existence of a general artificial intelligence is the next frontier of human development and perhaps the most important technological project of our species, many critics worry that our species may not survive for the final 50 years or so we need to make our technological vision a reality.

1. Nuclear Annihilation

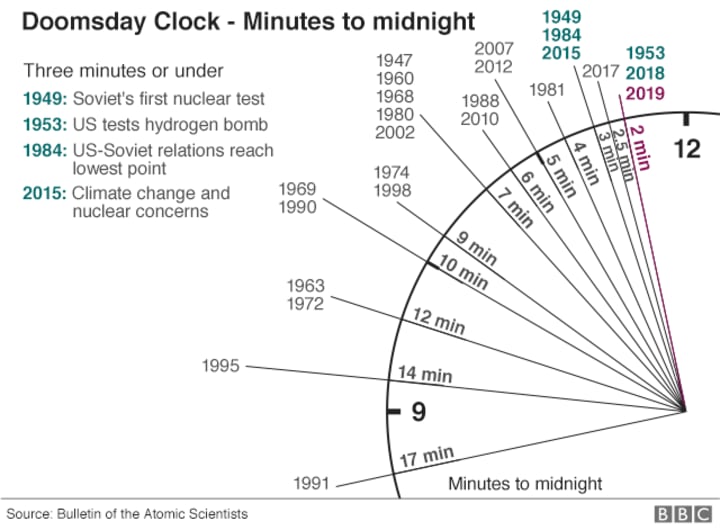

The Atomic Doomsday Clock

The Atomic Doomsday Clock remains at two minutes to midnight, the closest it has stood to nuclear catastrophe since the height of the Cold War, with no sign that tensions will be cooling off anytime soon. According to the Bulletin of the Atomic Scientists, the end of humanity has never in history been more likely than it is today.

What does this mean for the Singularity? If we're not able to survive the short time it'll take to create this technology, does this spell doom for the Singularity project?

2. Ecological Disaster

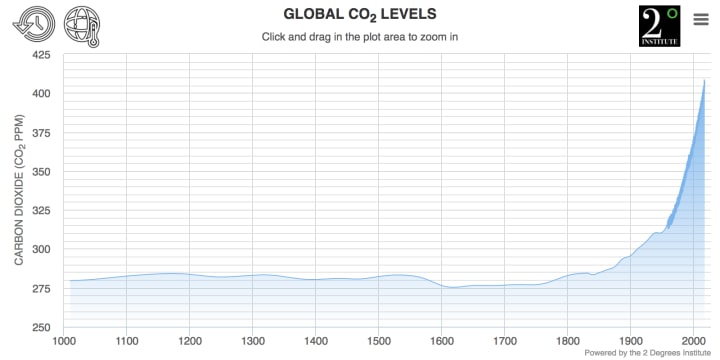

Research conducted by the '2 Degrees' Institute

With CO2 levels the highest they've been in over 400,000 years and rising exponentially, climate change is a very real threat to the survival of our species. One of the great hurdles of the next generation will be surviving in a rapidly changing world. In the last 20 years we've seen devastating changes to the global ecosystem. Put simply, the planet is slowly becoming less habitable for human life.

If we don't act fast, there may be no one left to work on the Singularity project, as governments reallocate resources to countering global warming instead of continuing to fund the development of A.I.

3. Governmental Collapse

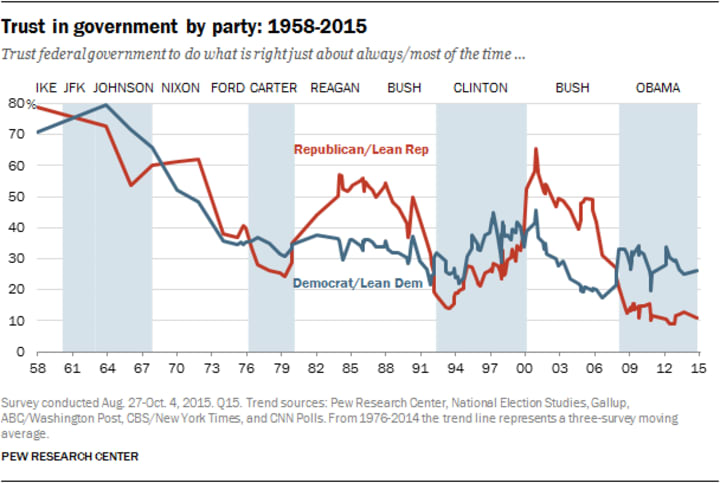

Pew Research Center: Trust in Government By Party

Despite trust in government consistently declining since the 1960s, it's hard to imagine a Mad Max-like wasteland of a future. That being said, the recent global trend towards right-wing populism, the dismantling of the European Union, constant impeachment challenges in the States, the leadership crisis in Venezuela, and the "Yellow Vest" protesters in France all point towards a breaking point for civilisation.

Proper legislation is fundamental to the development of a "safe" general artificial intelligence. It is the governments of the world that will decide what limitations must be put in place when testing and implementing near-human and human-level artificial intelligences, whether "conscious" A.I.s have any rights of self-preservation, and to what extent these human-level or greater intelligence programs can be protected (or even owned) under the law.

4. The machines will survive, we won't.

The Darkest Timeline

All this being said, even if reaching a human level of artificial intelligence is inevitable, what's to say the machines will deem us worth keeping around anyway?

Failure to implement proper legislation during the development of artificial intelligence may result in a merciless robot uprising. Sound like something out of a sci-fi horror? Well, think again: these are all very real problems that we face in the development of these programs.

At present, there is no consensus on the development of "machines with morality", nor on what code of ethics computer programs should be developed under. Perhaps the eventual downfall of humanity will be the very machines we create to surpass us.

In the end, what really matters isn't whether we're around to see the singularity, but whether we survive long enough to make it happen.

About the Creator

Tom Stock

Your Friendly Neighbourhood News Bot
